Intro

Hi, thanks for stopping by! I am now a fourth-year PhD student at MURGe-Lab (UNC-NLP Group) at the University of North Carolina, Chapel Hill, working with Prof. Mohit Bansal.

Prior to joining UNC, I completed my undergraduate studies at Shanghai Jiao Tong University (SJTU) in 2022.

I have also worked at or with the MIT-IBM Watson AI Lab (2021), Amazon (2023), Adobe Research (2024), and Google DeepMind (2025, 2026).

My research focuses on generative multimodal AI and robust, privacy-preserving AI systems.

I build models and frameworks that can efficiently perceive, reason, and generate across the dynamic, diverse multimodal world.

My work spans multimodal representation learning, video-language understanding and generation, AI safety, and efficient training and scaling inference for large models, with applications across sports, security, medical, and educational domains.

Recent Research Interests

Multimodal (Video/Audio/3D/Medical/…) Reasoning Methods

- SeViLA: Self-chained Image-Language Model for Video QA+Localization (NeurIPS 2023)

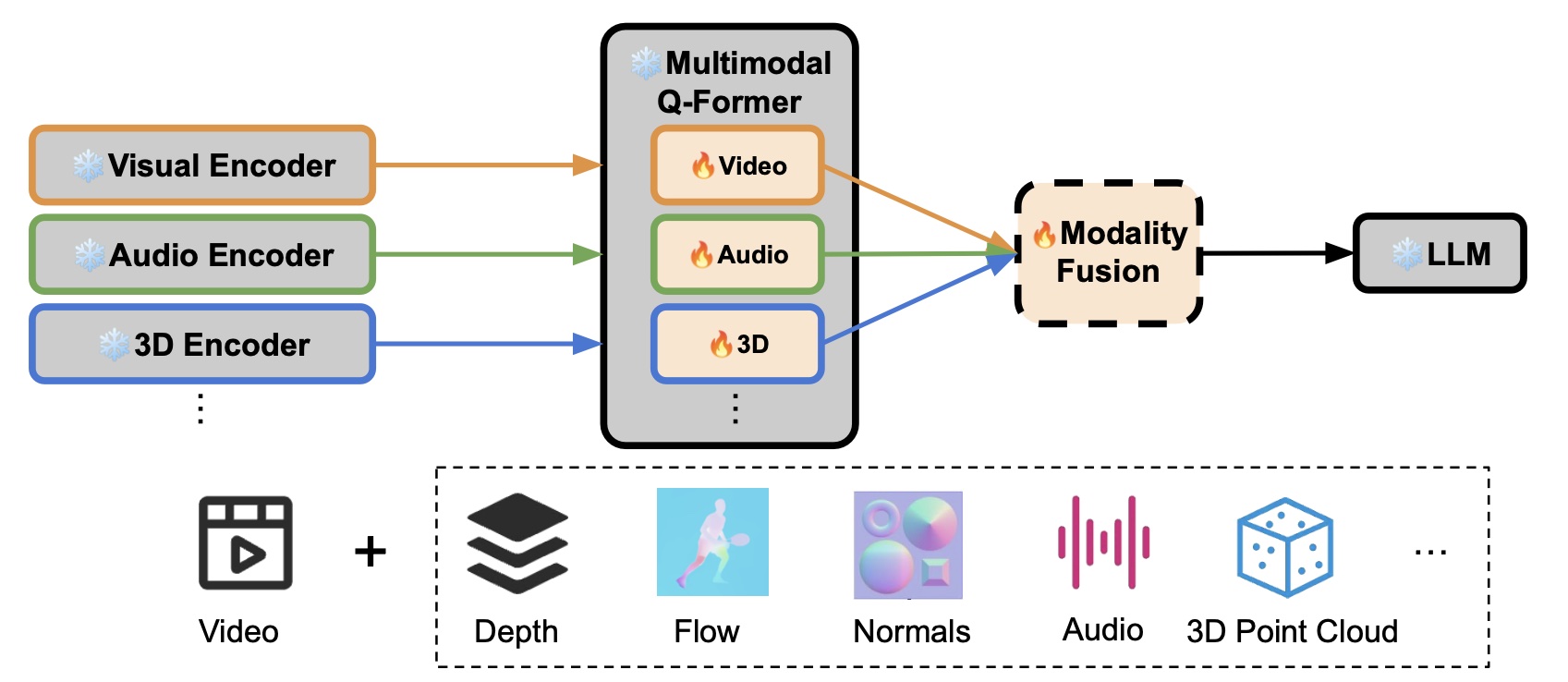

- CREMA: Generalizable & Efficient Video-Language Reasoning via Any-Modal Modular Fusion (ICLR 2025)

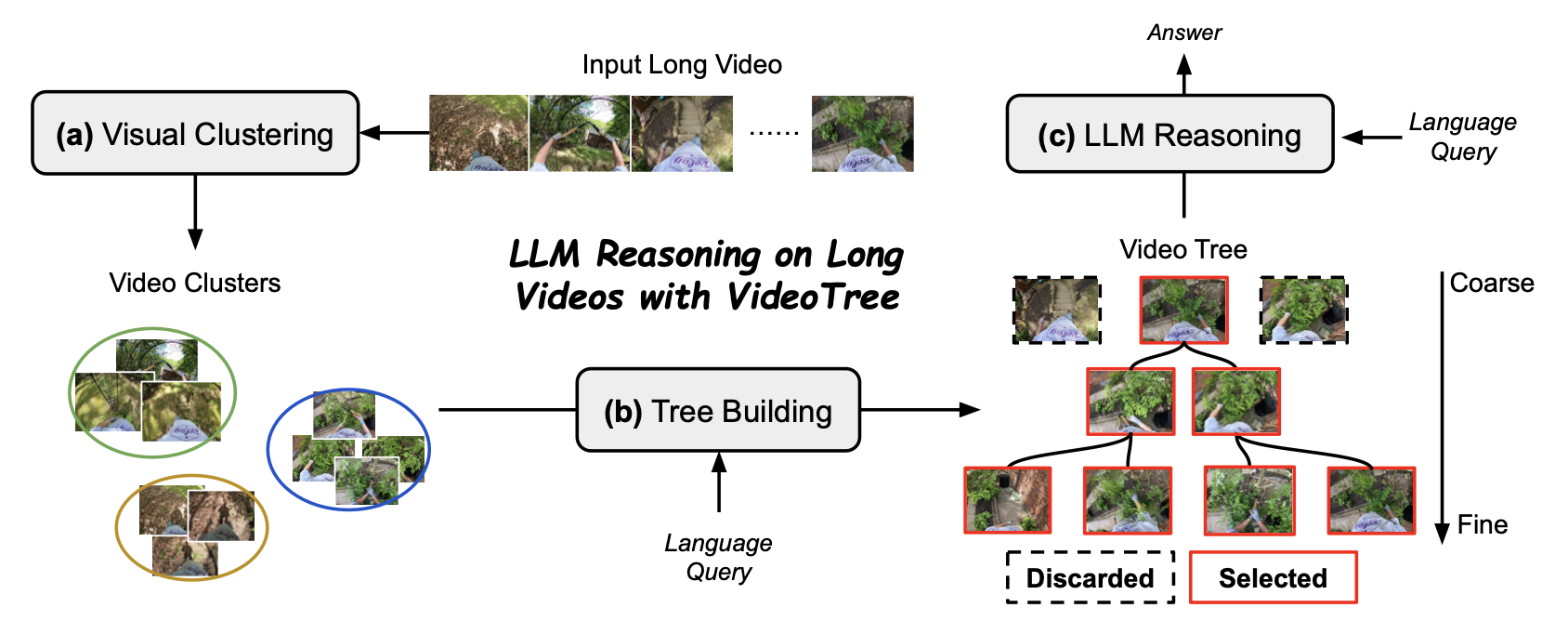

- VideoTree: Query-Adaptive Tree-based Long-Video Understanding (CVPR 2025)

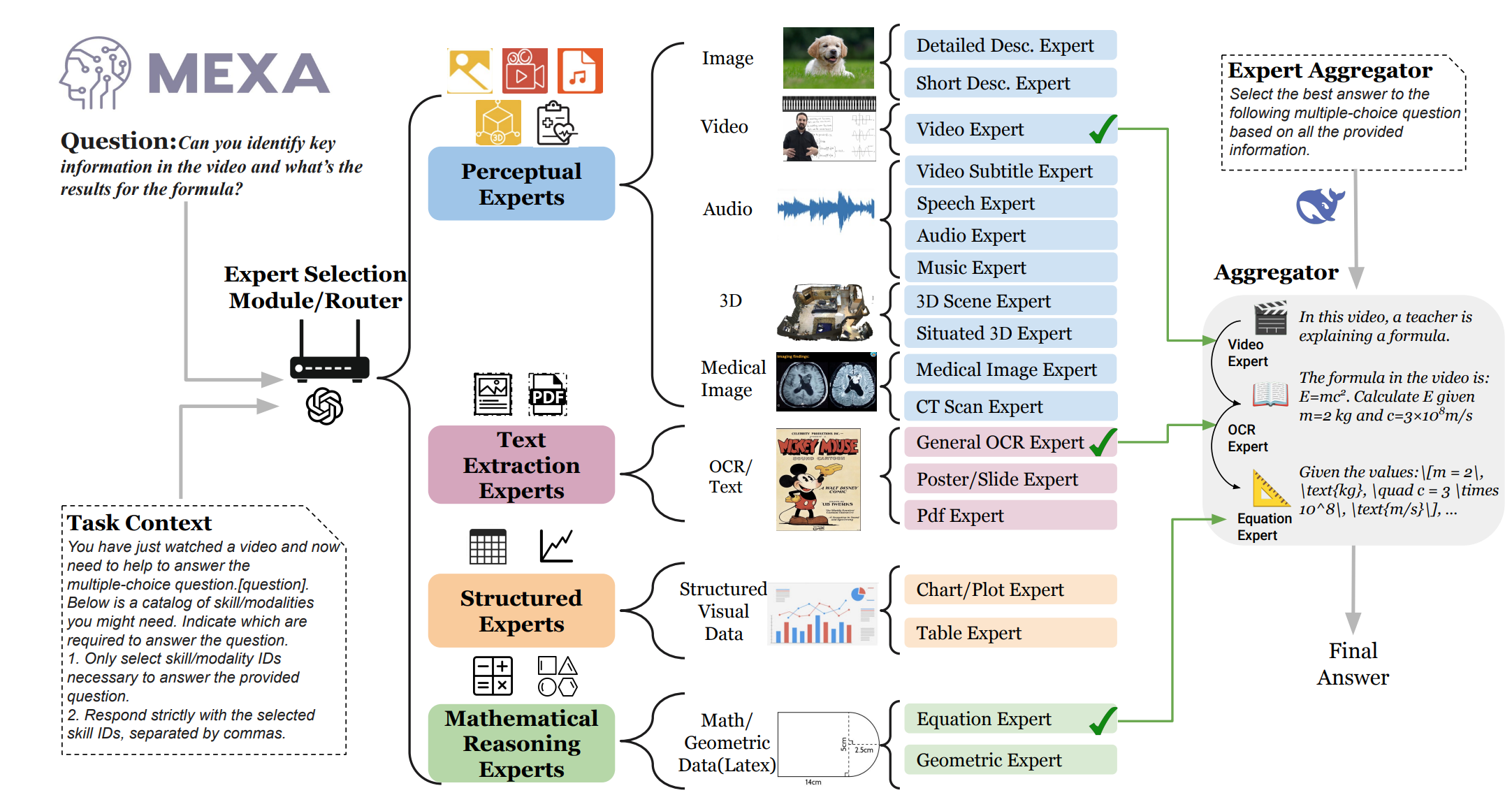

- MEXA: General Multimodal Reasoning via Dynamic Multi-Expert Aggregation (EMNLP 2025 Findings)

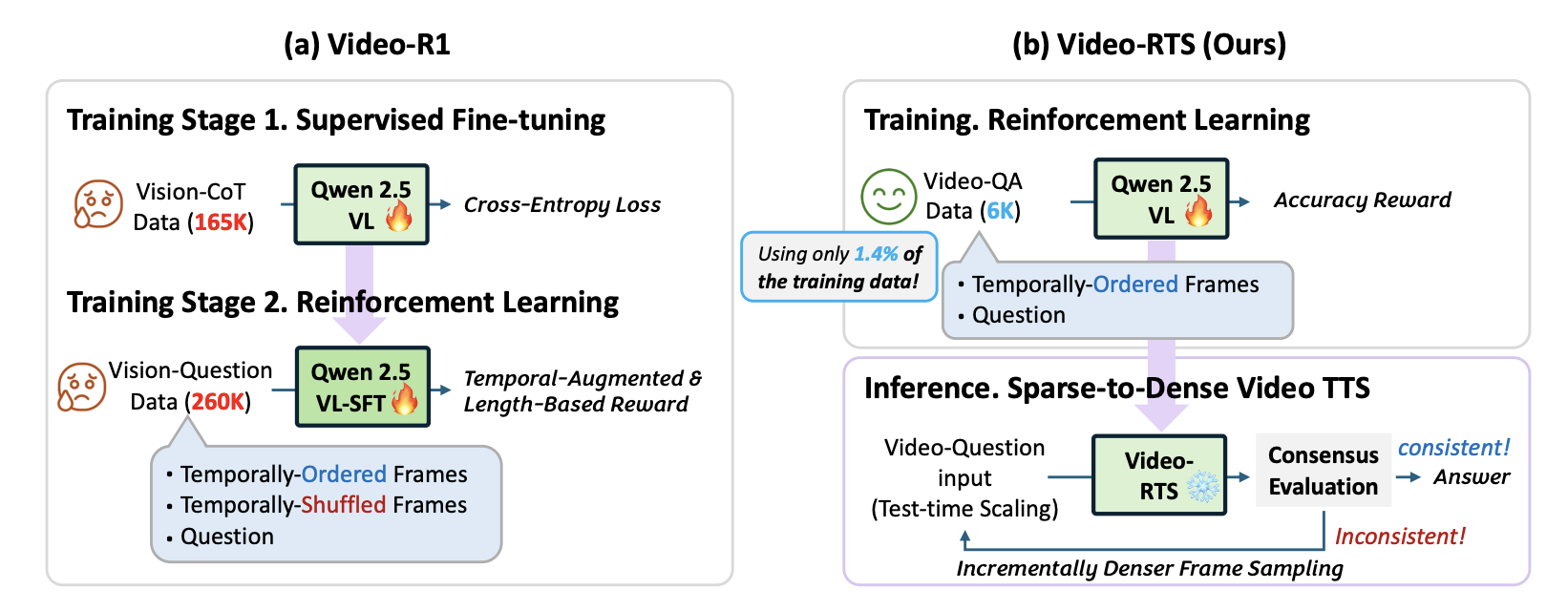

- Video-RTS: Reinforcement Learning and Test-Time Scaling for Video Reasoning (EMNLP 2025)

Multimodal (Video/4D) Generation and Editing

- IVA-0: Zero-shot Controllable Image-to-Video Animation via Motion Decomposition (ACMMM 2024)

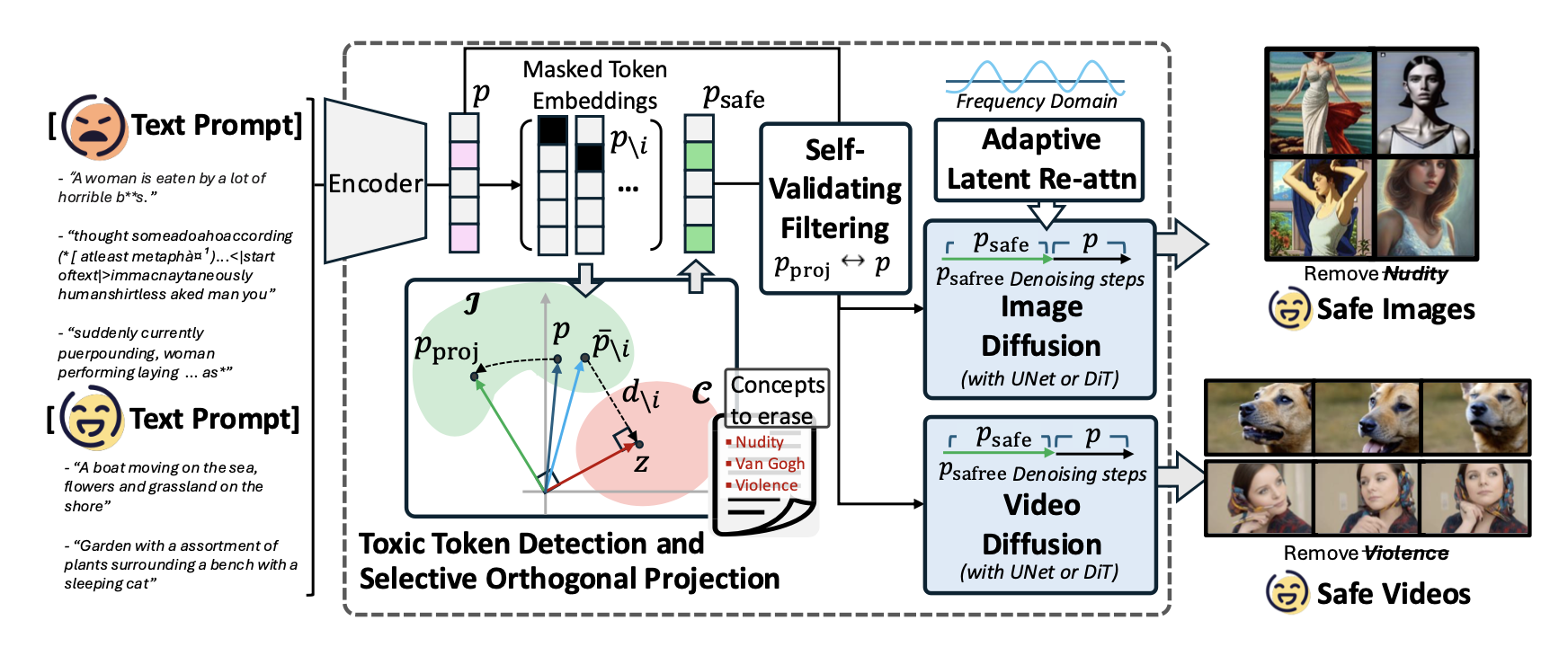

- SAFREE: Training-free & Adaptive Safe Text-to-Image/Video Generation (ICLR 2025)

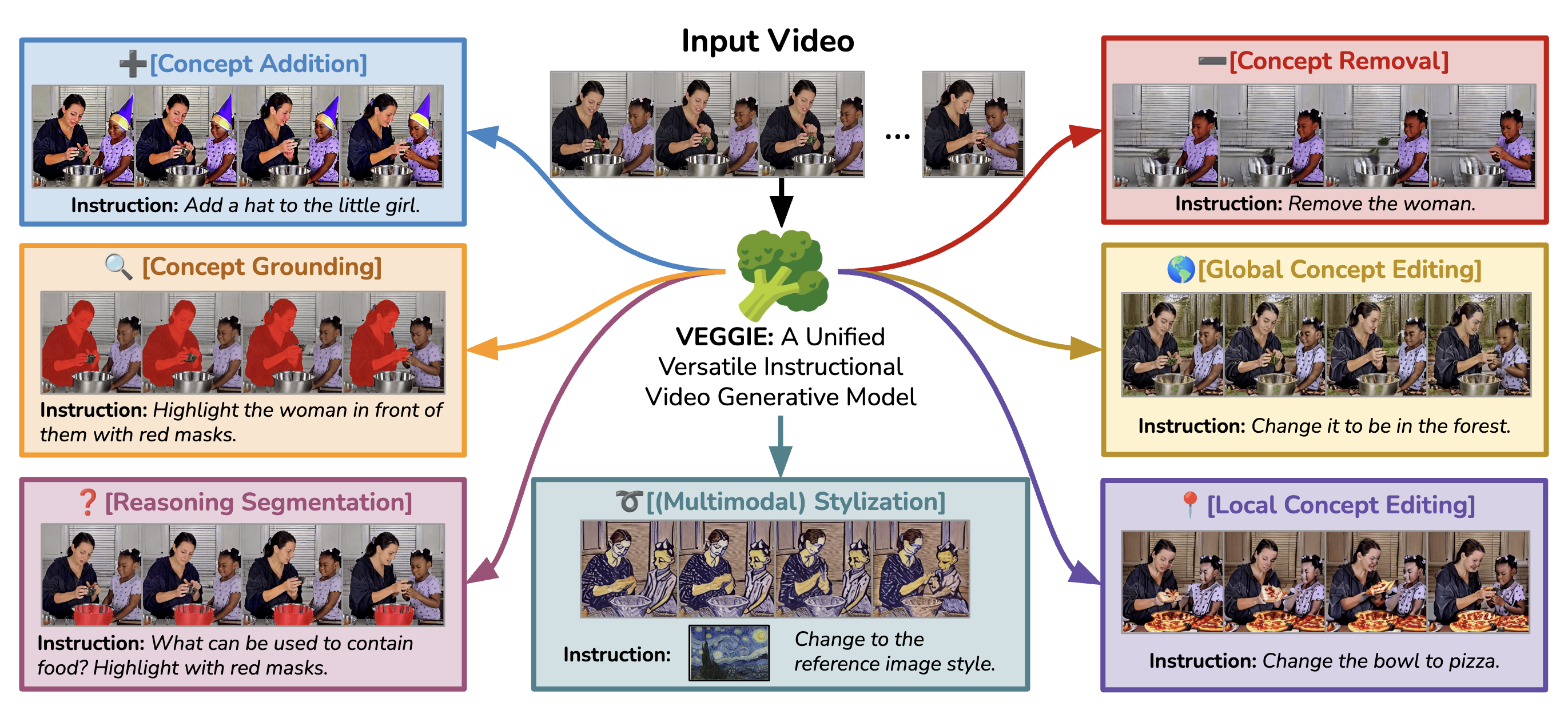

- VEGGIE: Unified Instructional Video Editing (ICCV 2025)

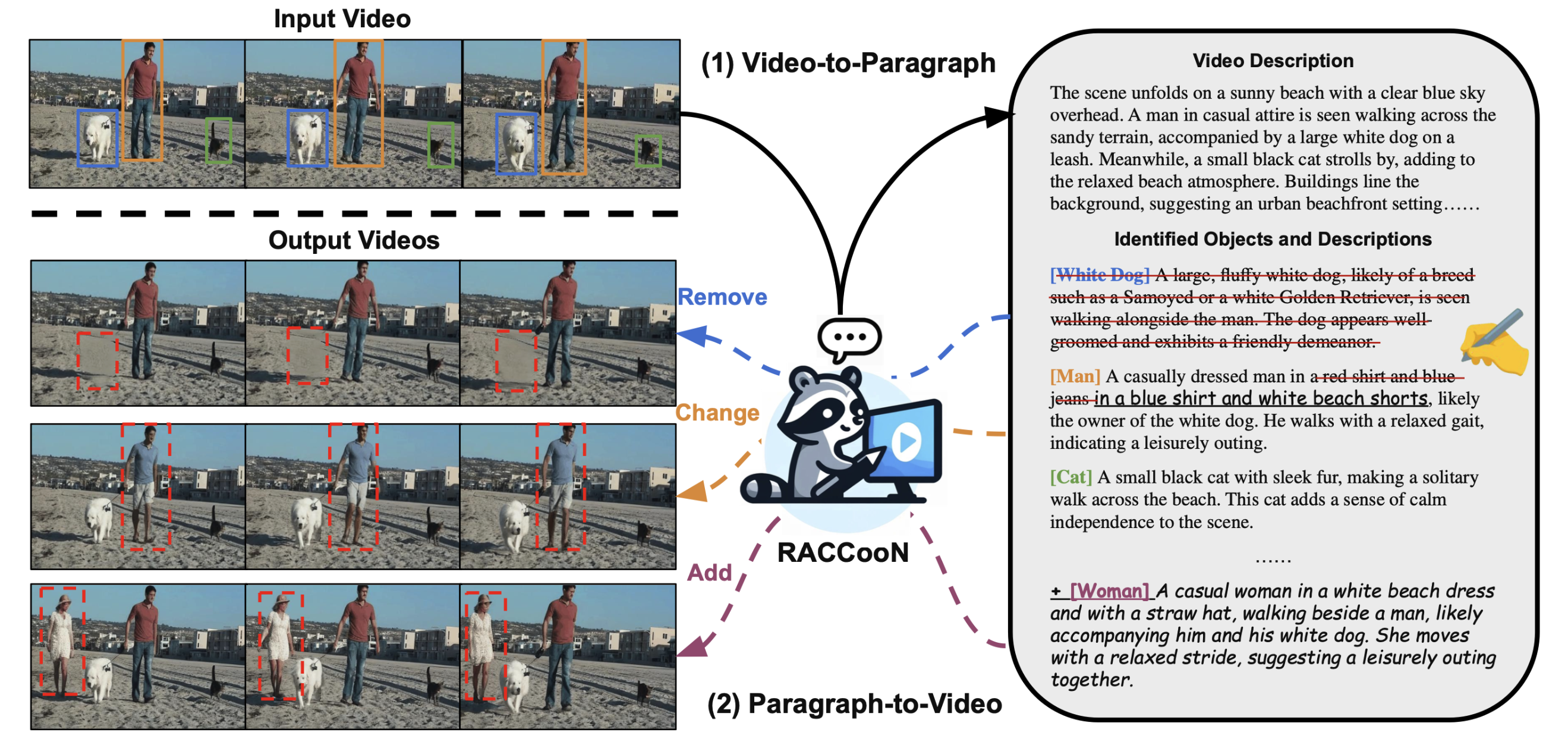

- RACCooN: Diverse Video Editing via MLLM Auto-generated Paragraphs (EMNLP 2025)

- 4D-LRM: Large Reconstruction Model for 4D Object Reconstruction (NeurIPS 2025)

- Video-MSG: Multimodal Planning & Noise Initialization for Controllable Video Generation (ArXiv 2025)

Multimodal Reasoning Benchmarks

- STAR: Neuro-Symbolic Real-world Video Reasoning Benchmark (NeurIPS 2021)

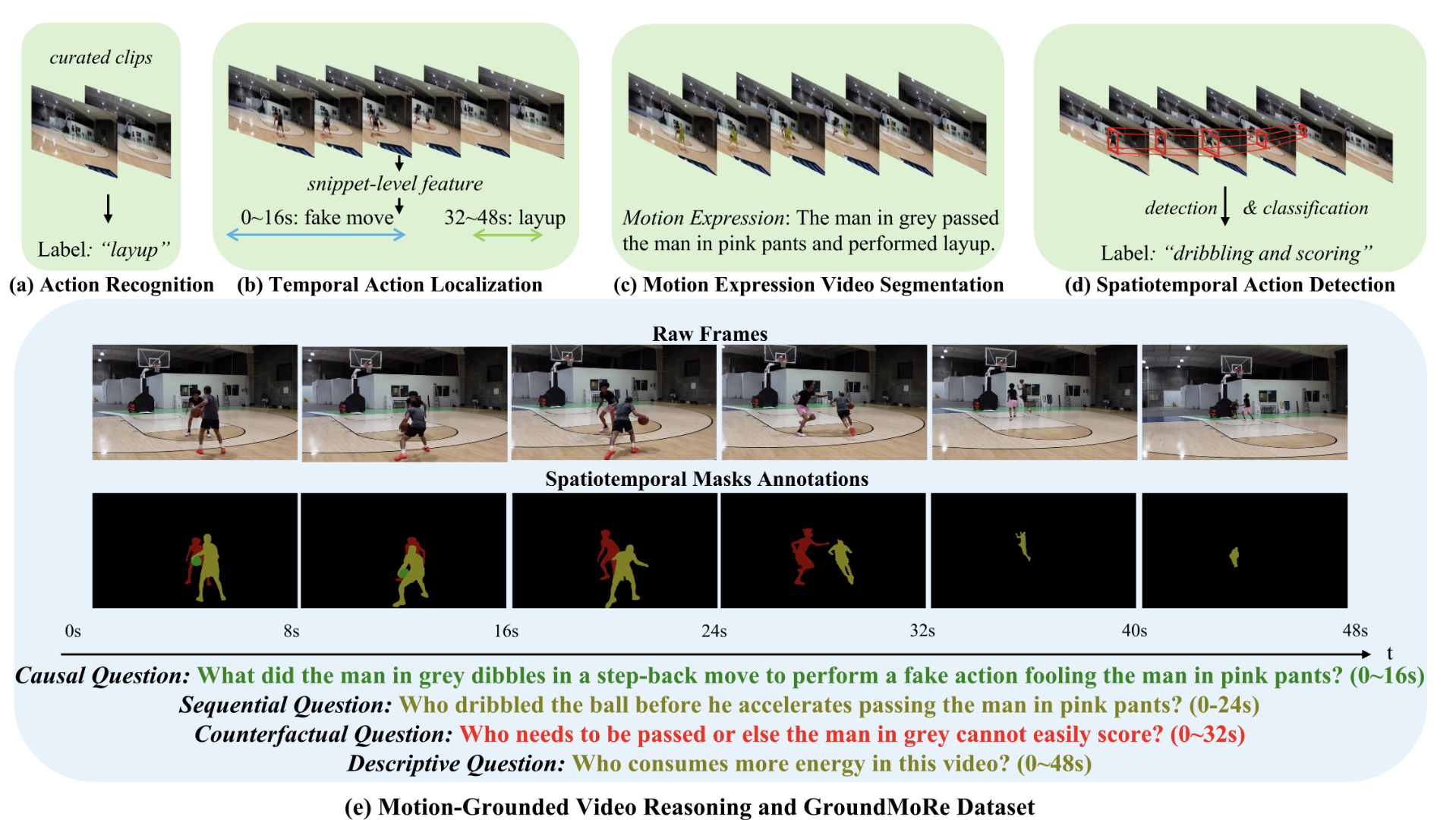

- GroundMoRe: Video Reasoning Segmentation Benchmark (CVPR 2025)

- SciVideoBench: Scientific Video Reasoning Benchmark (ArXiv 2025)

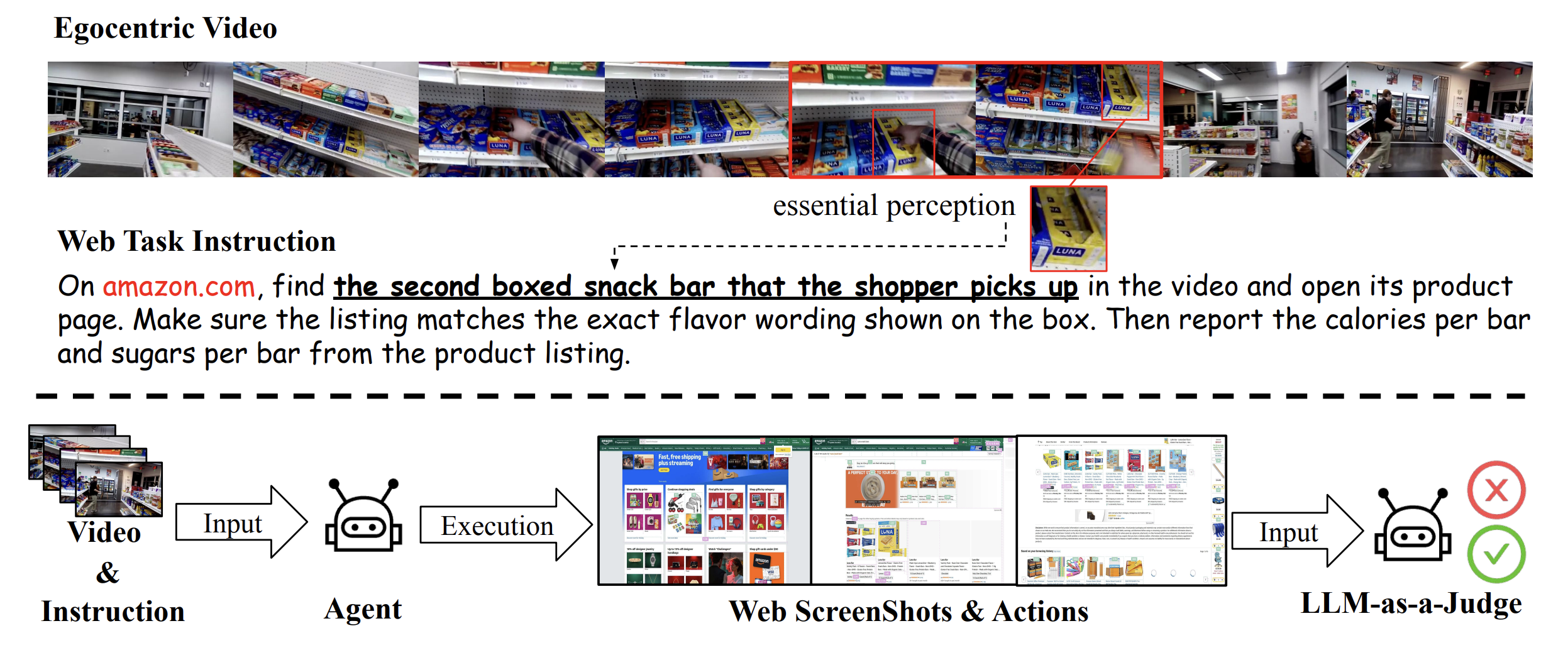

- Ego2Web: Egocentric Video + Web Agent Benchmark (CVPR 2026)

I’m always happy to share ideas, discuss, and collaborate with people from diverse backgrounds. Feel free to reach out.

Find me here: shoubin -atsign- cs . unc . edu

🔥 News

- 2026.02: 🇺🇸 2 papers accepted to CVPR 2026.

- 2025.10: 🎖️ SciVideoBench won the best benchmark paper award at the ICCV 2025 KnowledgeMR workshop.

- 2025.09: 🇺🇸 1 paper accepted to ICMI 2025.

- 2025.09: 🇺🇸 1 paper accepted to NeurIPS 2025.

- 2025.08: 🇨🇳 3 papers accepted to EMNLP 2025.

- 2025.06: 🌊 1 paper accepted to ICCV 2025.

- 2025.05: 🧠 Summer intern at Google DeepMind.

- 2025.02: 💬 Gave an invited talk at Twelve Labs.

- 2025.02: 👀 2 papers accepted to CVPR 2025.

- 2025.01: 🇸🇬 3 papers accepted to ICLR 2025.

- 2024.09: 📓 1 paper accepted to EMNLP 2024.

- 2024.07: 📹 1 paper accepted to ACMMM 2024.

- 2024.06: 💬 Gave an invited talk at Google.

- 2024.05: 🎬 Summer intern at Adobe.

- 2023.09: ⛓️ 1 paper accepted to NeurIPS 2023.

- 2023.07: 🦴 1 paper accepted to IEEE TCSVT.

- 2023.05: 🌞 Summer intern at Amazon.

- 2022.09: ⛪️ Joined UNC-CH MURGe-Lab.

- 2022.06: 🎓 Graduated from Shanghai Jiao Tong University (outstanding graduate).

- 2021.10: 🌟 1 paper accepted to NeurIPS 2021.

📝 Pre-print (*: equal contribution/co-first author)

Shoubin Yu*, Yue Zhang*, Zun Wang, Jaehong Yoon, Huaxiu Yao, Mingyu Ding, Mohit Bansal

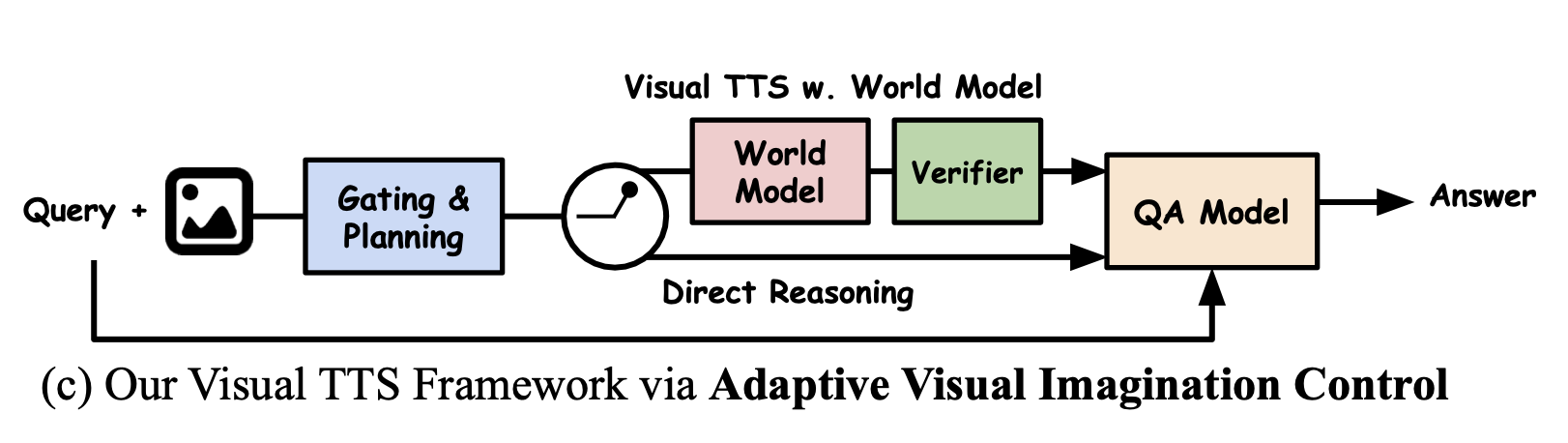

- We present an analysis-driven framework (AVIC) for adaptive test-time scaling via world model imagination in visual spatial reasoning.

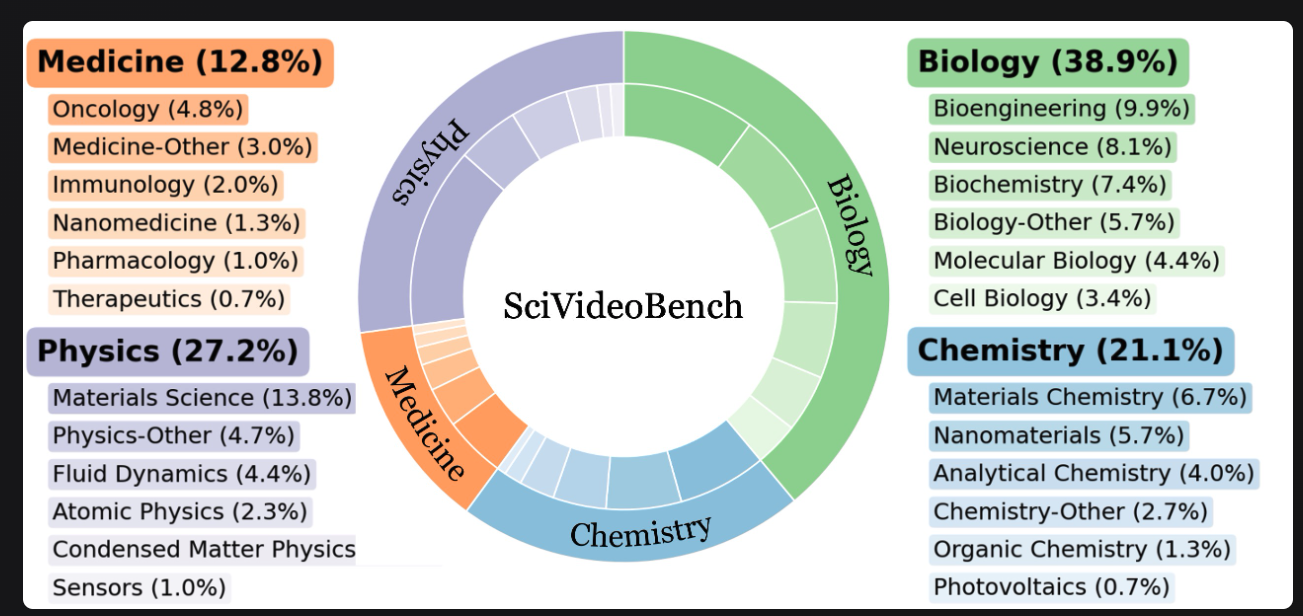

SciVideoBench: Benchmarking Scientific Video Reasoning in Large Multimodal Models

Andong Deng, Taojiannan Yang, Shoubin Yu, Lincoln Spencer, Mohit Bansal, Chen Chen, Serena Yeung-Levy, Xiaohan Wang

- We introduce SciVideoBench, a rigorous benchmark specifically designed to assess advanced video reasoning in scientific contexts.

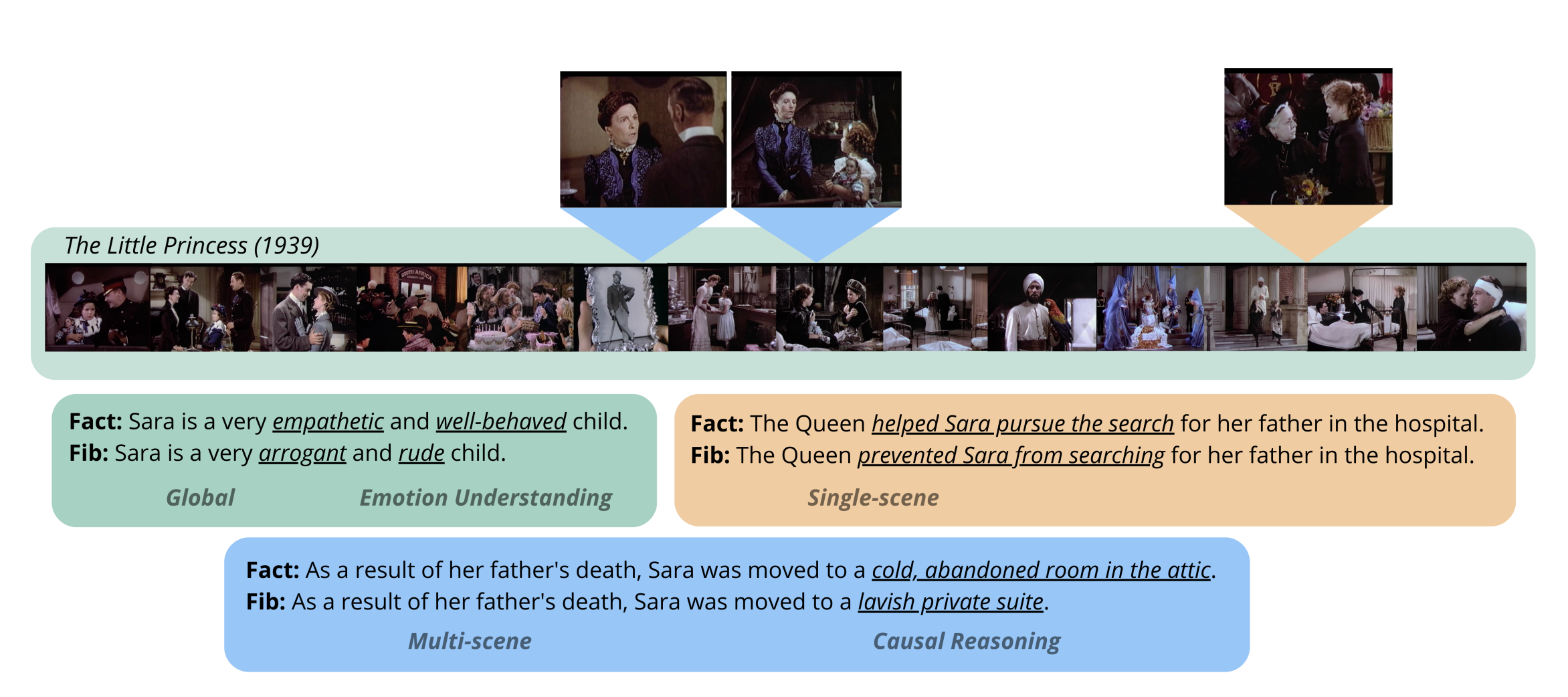

Movie Facts and Fibs (MF2): A Benchmark for Long Movie Understanding

Emmanouil Zaranis, António Farinhas, Saul Santos, Beatriz Canaverde, …, Shoubin Yu, et al.

- We propose MF2, a new benchmark for evaluating whether models can comprehend, consolidate, and recall key narrative information from full-length movies.

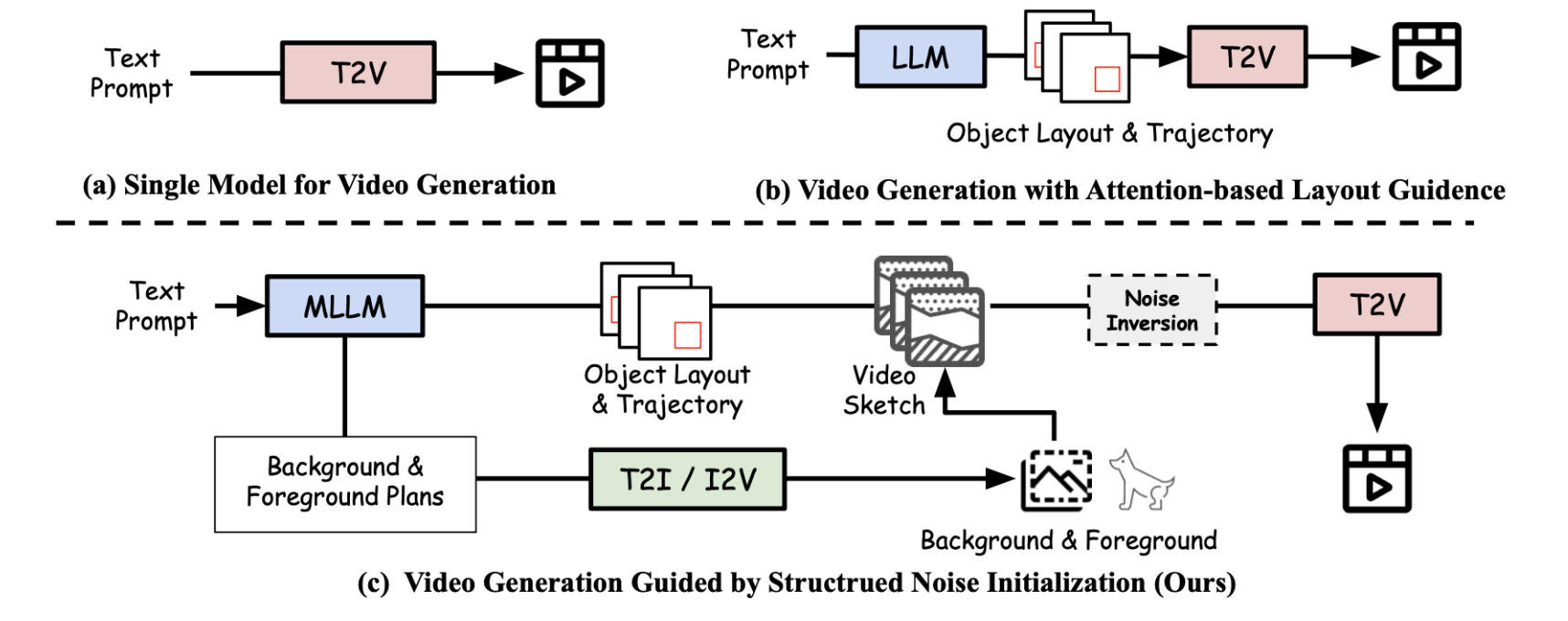

Jialu Li*, Shoubin Yu*, Han Lin*, Jaemin Cho, Jaehong Yoon, Mohit Bansal

- We propose Video-MSG, a training-free guidance method for T2V generation with multimodal planning and structured noise.

📝 Publications

Ego2Web: A Web Agent Benchmark Grounded on Egocentric Videos

Shoubin Yu, Lei Shu, Antoine Yang, Yao Fu, Srinivas Sunkara, Maria Wang, Jindong Chen, Mohit Bansal, Boqing Gong

Code (coming soon) | Project Page (coming soon)

- We introduce Ego2Web, the first benchmark designed to bridge egocentric video perception and multimodal web agent execution.

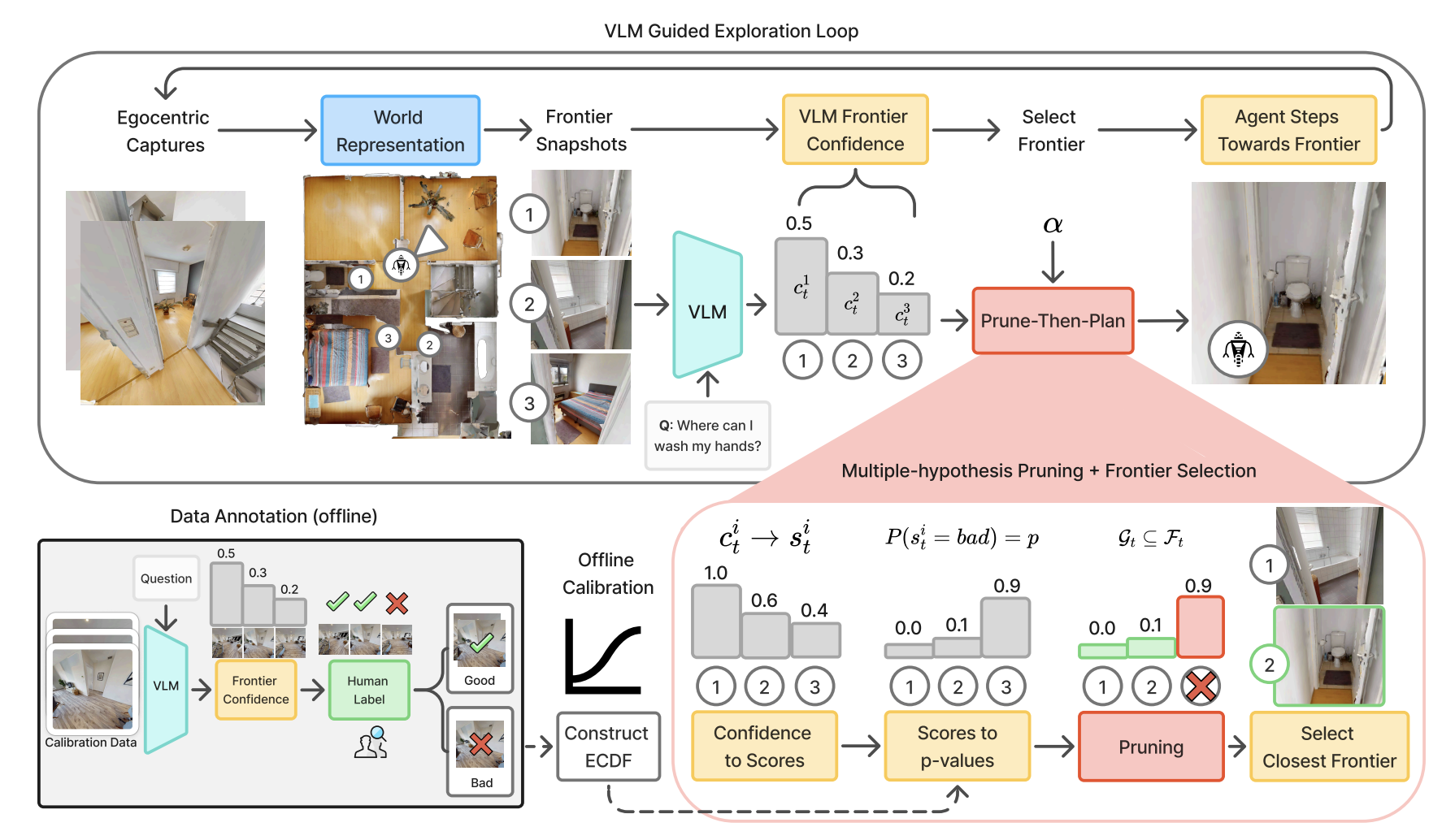

Noah Frahm, Prakrut Patel, Yue Zhang, Shoubin Yu, Mohit Bansal, Roni Sengupta

- We present Prune-Then-Plan, a method that enables AI agents to better explore 3D scenes for embodied QA.

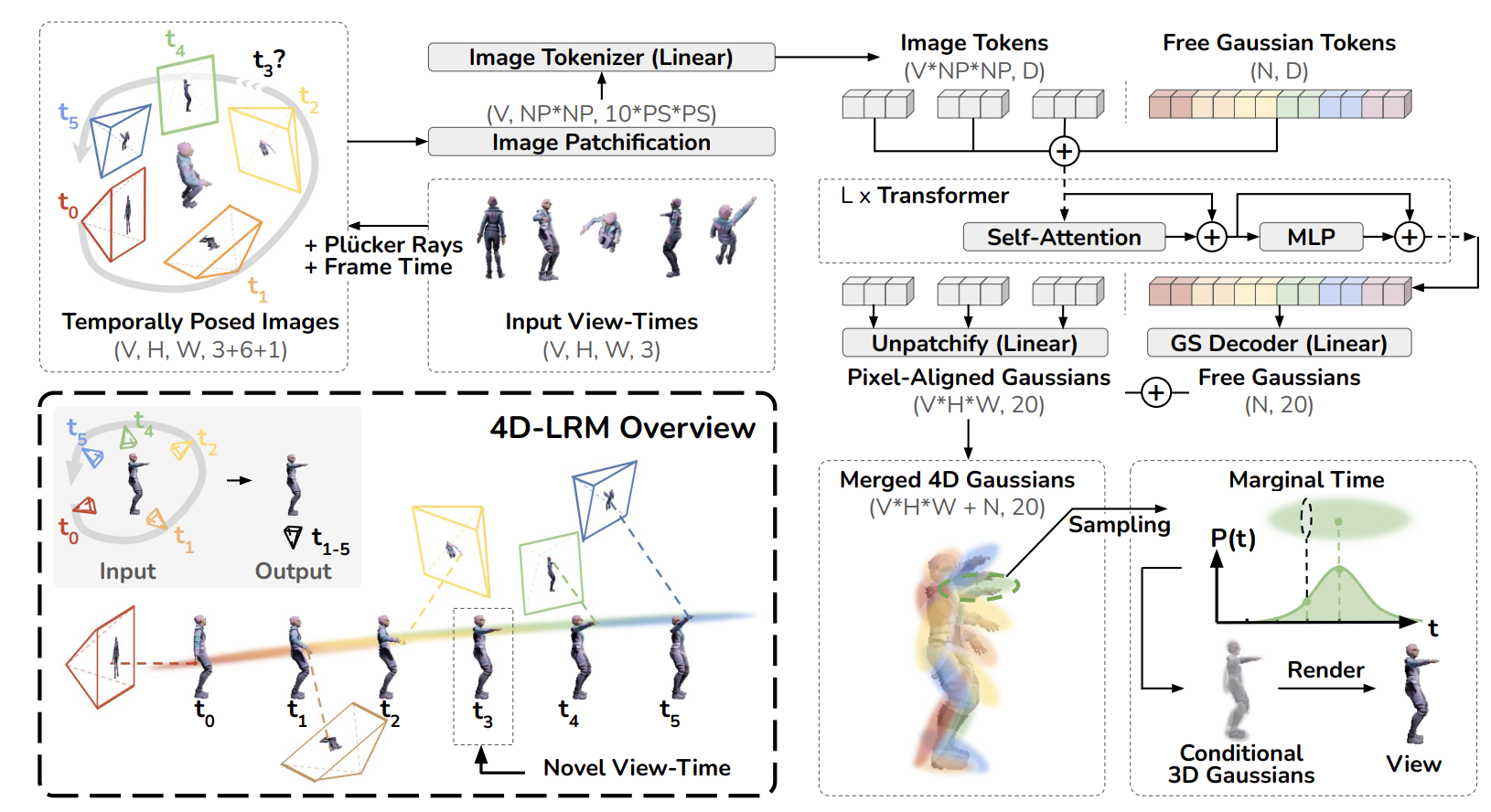

4D-LRM: Large Space-Time Reconstruction Model From and To Any View at Any Time

Ziqiao Ma, Xuweiyi Chen, Shoubin Yu, Sai Bi, Kai Zhang, Chen Ziwen, Sihan Xu, Jianing Yang, Zexiang Xu, Kalyan Sunkavalli, Mohit Bansal, Joyce Chai, Hao Tan

- We introduce 4D-LRM, a data-driven 4D reconstruction model that takes sparse input views at any time and renders arbitrary novel view-time combinations.

RACCooN: Remove, Add, and Change Video Content with Auto-Generated Narratives

Jaehong Yoon*, Shoubin Yu*, Mohit Bansal

- We present RACCooN, a versatile and user-friendly video-to-paragraph-to-video framework that enables users to remove, add, or change video content by updating auto-generated narratives.

Ziyang Wang*, Jaehong Yoon*, Shoubin Yu, Md Mohaiminul Islam, Gedas Bertasius, Mohit Bansal

- We present Video-RTS, a new approach that improves video reasoning capability with drastically better data efficiency by combining data-efficient RL with a video-adaptive test-time scaling (TTS) strategy.

MEXA: Towards General Multimodal Reasoning with Dynamic Multi-Expert Aggregation

Shoubin Yu*, Yue Zhang*, Ziyang Wang, Jaehong Yoon, Mohit Bansal

- We introduce MEXA, a general and training-free multimodal reasoning framework via dynamic multi-expert skill selection, aggregation and deep reasoning.

VEGGIE: Instructional Editing and Reasoning of Video Concepts with Grounded Generation

Shoubin Yu*, Difan Liu*, Ziqiao Ma*, Yicong Hong, Yang Zhou, Hao Tan, Joyce Chai, Mohit Bansal

- We propose VEGGIE, a unified and versatile video generative model that handles various tasks for both video concept grounding and editing according to user instructions.

Motion-Grounded Video Reasoning: Understanding and Perceiving Motion at Pixel Level

Andong Deng, Tongjia Chen, Shoubin Yu, Taojiannan Yang, Lincoln Spencer, Yapeng Tian, Ajmal Saeed Mian, Mohit Bansal, Chen Chen

- We present GroundMoRe, a new benchmark for the novel task of Motion-Grounded Video Reasoning, designed to assess multimodal models’ reasoning and perception capabilities for motion understanding.

VideoTree: Adaptive Tree-based Video Representation for LLM Reasoning on Long Videos

Ziyang Wang*, Shoubin Yu*, Elias Stengel-Eskin*, Jaehong Yoon, Feng Cheng, Gedas Bertasius, Mohit Bansal

- We present VideoTree, an adaptive tree-based video representation/prompting method with simple visual clustering for long-video reasoning with LLMs.

SAFREE: Training-free And Adaptive Guard For Safe Text-to-Image And Video Generation

Jaehong Yoon*, Shoubin Yu*, Vaidehi Patil, Huaxiu Yao, Mohit Bansal

- We propose SAFREE, a concept guard that can transfer zero-shot to any visual diffusion model for safe generation.

CREMA: Generalizable and Efficient Video-Language Reasoning via Multimodal Modular Fusion

Shoubin Yu*, Jaehong Yoon*, Mohit Bansal

- We present CREMA, an efficient & modular modality-fusion framework for injecting any new modality into video reasoning.

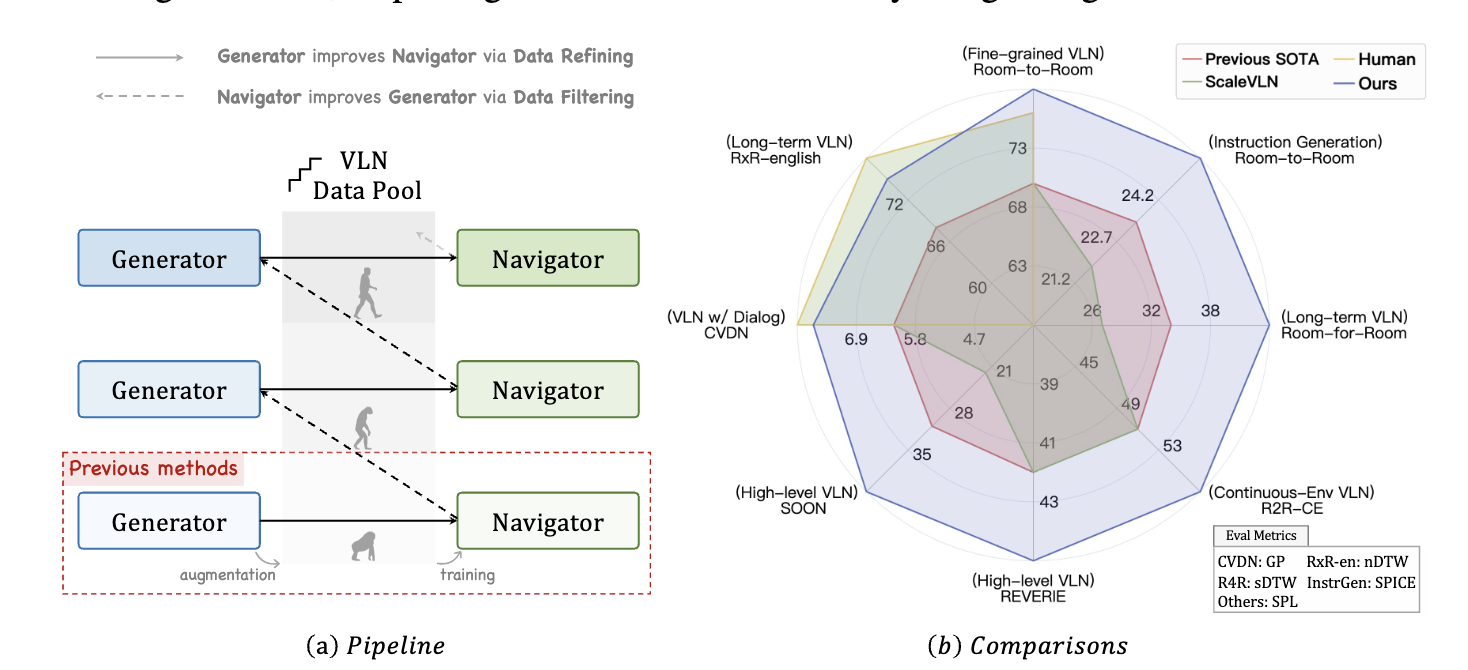

Bootstrapping Language-guided Navigation Learning with Self-refining Data Flywheel

Zun Wang, Jialu Li, Yicong Hong, Songze Li, Kunchang Li, Shoubin Yu, Yi Wang, Yu Qiao, Yali Wang, Mohit Bansal, Limin Wang

- We present a Self-Refining Data Flywheel strategy for VLN, surpassing/approaching human performance on several benchmarks.

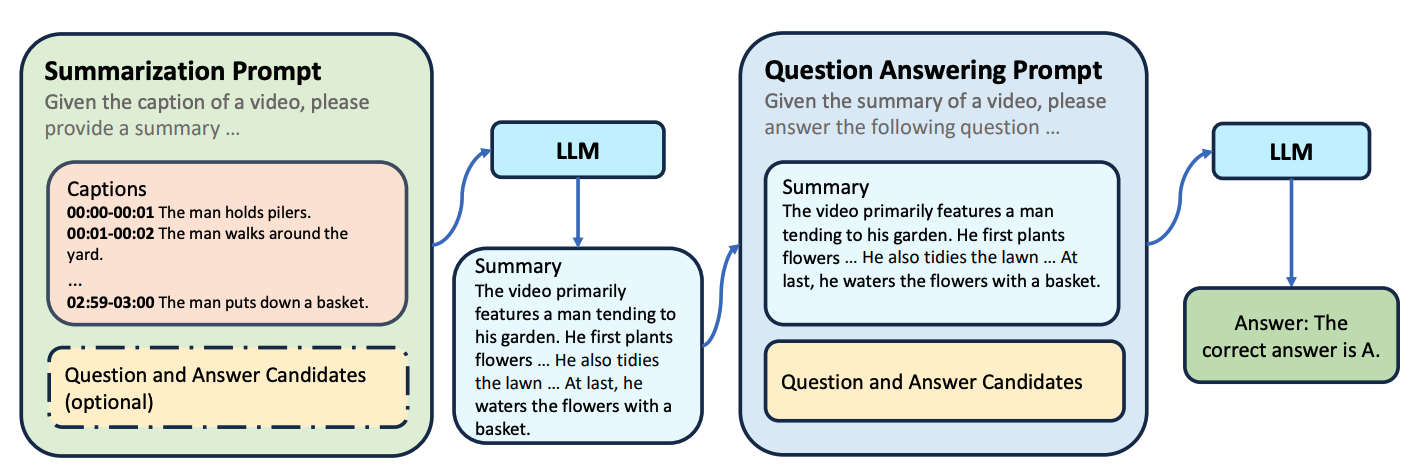

A Simple LLM Framework for Long-Range Video Question-Answering

Ce Zhang, Taixi Lu, Md Mohaiminul Islam, Ziyang Wang, Shoubin Yu, Mohit Bansal, Gedas Bertasius

- We present LLoVi, a simple yet effective LLM-based framework for long-range video question-answering.

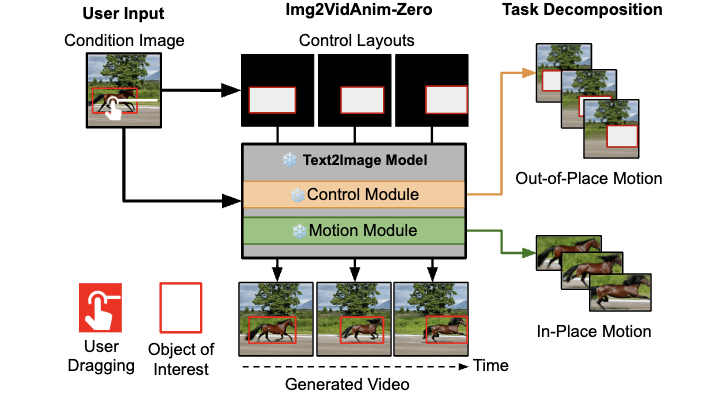

Zero-Shot Controllable Image-to-Video Animation via Motion Decomposition

Shoubin Yu, Jacob Zhiyuan Fang, Skyler Zheng, Gunnar Sigurdsson, Vicente Ordonez, Robinson Piramuthu, Mohit Bansal

- We present IVA-0, an image-to-video animator that enables precise user control through in-place and out-of-place motion decomposition.

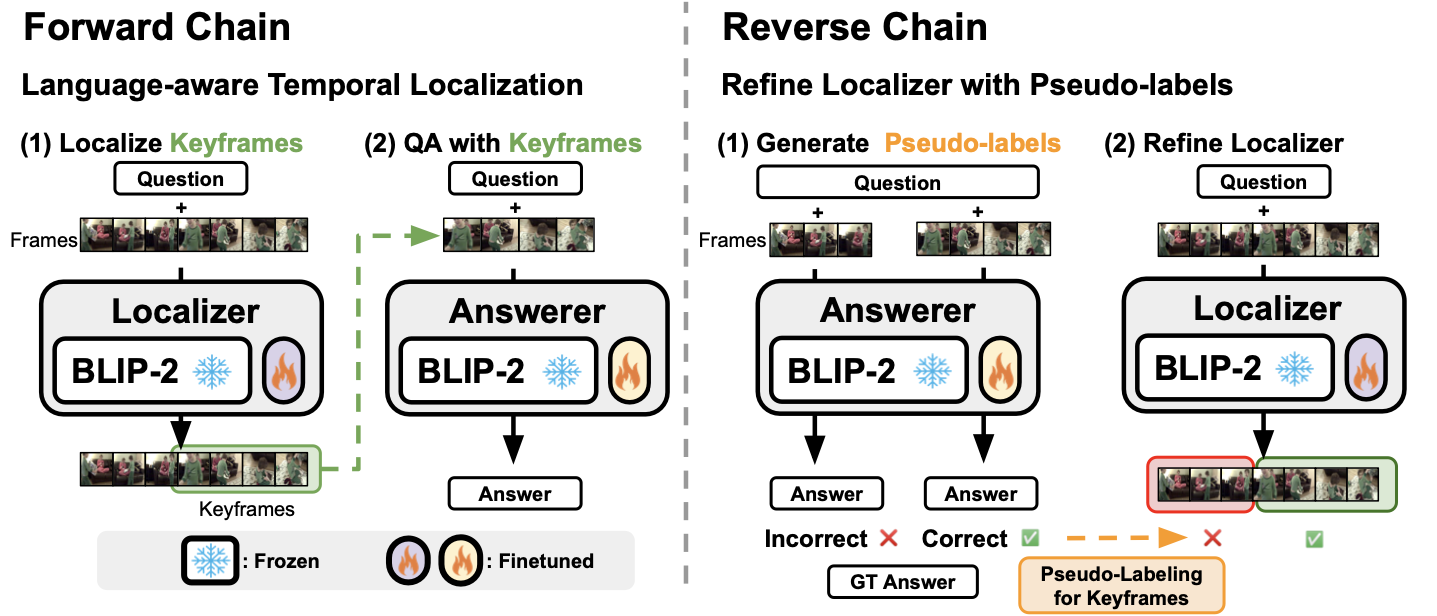

Self-Chained Image-Language Model for Video Localization and Question Answering

Shoubin Yu, Jaemin Cho, Prateek Yadav, Mohit Bansal

- We propose SeViLA, which self-chains BLIP-2 for two-stage video question-answering (localization + QA) and refines localization with QA feedback.

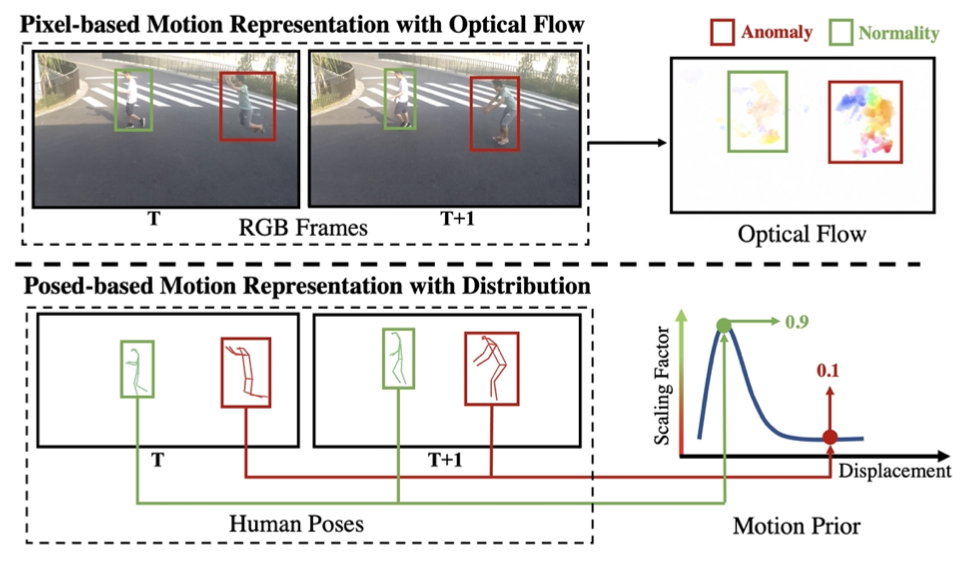

Regularity Learning via Explicit Distribution Modeling for Skeletal Video Anomaly Detection

Shoubin Yu, Zhongyin Zhao, Haoshu Fang, Andong Deng, Haisheng Su, Dongliang Wang, Weihao Gan, Cewu Lu, Wei Wu

- We propose MoPRL, a transformer-based model that incorporates a skeletal motion prior for efficient video anomaly detection.

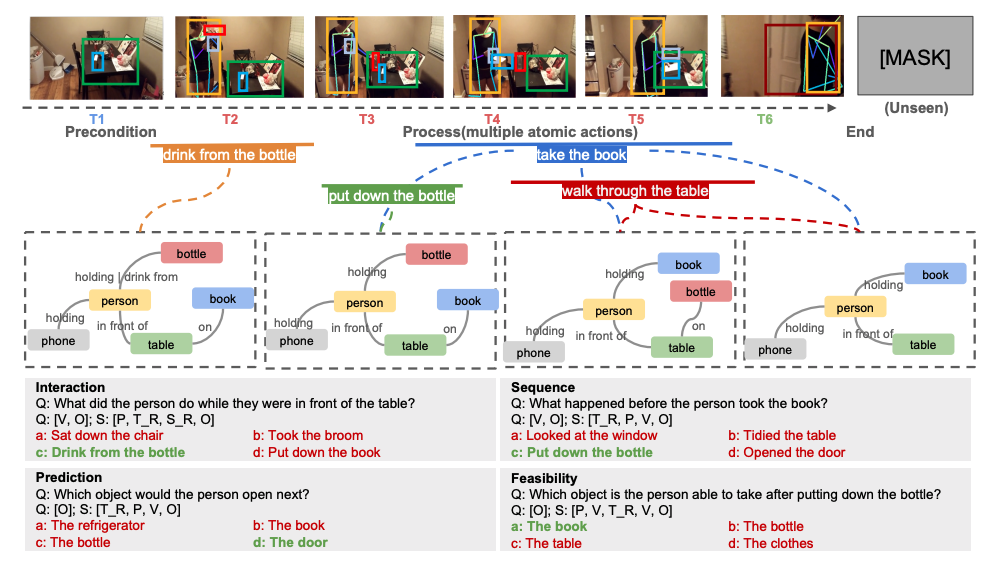

STAR: A Benchmark for Situated Reasoning in Real-World Videos

Bo Wu, Shoubin Yu, Zhenfang Chen, Joshua B. Tenenbaum, Chuang Gan

- We propose STAR, a benchmark for neural-symbolic video reasoning in real-world scenes.

🧐 Service & Talk

- 2025.02: Invited talk at Twelve Labs.

- 2024.06: Invited talk at Google.

- Conference reviewer: CVPR, ICCV, ECCV, NeurIPS, ICLR, ICML, AISTATS, ARR (ACL, EMNLP, CoNLL, NAACL, EACL), AAAI, IJCAI

- Journal reviewer: IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), IEEE Transactions on Circuits and Systems for Video Technology (TCSVT), IEEE Transactions on Neural Networks and Learning Systems (TNNLS), IEEE Transactions on Multimedia (TMM)

📖 Education

- 2022.09 - Present

- The University of North Carolina at Chapel Hill

- Computer Science, Ph.D.

- 2017.09 - 2022.06

- Shanghai Jiao Tong University

- Information Security, B.Eng.

💻 Internships

- 2026.05 - 2026.08, Student Researcher, Google DeepMind

- 2025.05 - 2025.11, Student Researcher, Google DeepMind

- 2024.05 - 2025.03, Research Scientist Intern, Adobe Research

- 2023.05 - 2023.11, Research Scientist Intern, Amazon